Collaborengine[1]: GenAI at the Mount Writing Centre

Clare Goulet, MSVU Halifax, Nova Scotia, Canada

Educators … must work collaboratively to foster environments that balance the benefits of AI with the development of critical thinking.

—M. Gerlich, “AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking”

Mount Saint Vincent University in Halifax, Nova Scotia is grappling with the swift emergence of generative AI in higher education, and its writing centre is in a high-stakes role where we’re both experiencing and ameliorating its immediate effects, mediating between student and assignment, student and professor, student and university policy. From the start, we chose to make our role educative, collaborating with partners to steer students to become more flexible, independent writers able to work with a range of tools within required boundaries, with transparency, and with academic integrity, and quickly. But recent evidence suggests we pause to develop an effective process. In all this, writing centres are thwacking a path where there is no precedent.

As at the majority of North American universities, departments and faculty at the Mount determine AI use and boundaries in their own classes, and these can change even within the same class from assignment to assignment. What’s a student to do? How can we support student writers facing a new set of boundaries every term, as well as temptations, expectations, and consequences? Here’s a snapshot of what we’re doing in our writing centre, where we’re going, and how we’re getting there.

Beginquire

For us, cross-campus integration is key: the Mount Writing Centre has regular collaborative partners in the university’s Teaching and Learning Centre and its Library for pan-university, all-program outreach that educates and informs both students and faculty. Alignment on a consistent approach and clear messaging is essential. Together with the cross-campus Writing Initiatives Committee, we share front-line experience and bring it into decisions on information and approach, workshops, and campus education, up to recommendations on university GenAI policy (though we do not set policy).

Fall 2024 saw the first campus surge in out-of-bounds GenAI use by students in coursework (though not in writing brought to Centre appointments). In response, the Writing Centre ran a simple plain-language poster campaign (Figure 1) to meet an urgent student need. We had to fit the Mount’s AI approach, diverse prof uses or non-use of GenAI, writing tutor needs and boundaries, and the latest AI citation styles, simplifying to “What’s allowed?” “How do I know?” “How do I cite?” and normalizing source citation, as for any other academic work.

Figure 1

Figure showing plain-language poster campaign for writing center

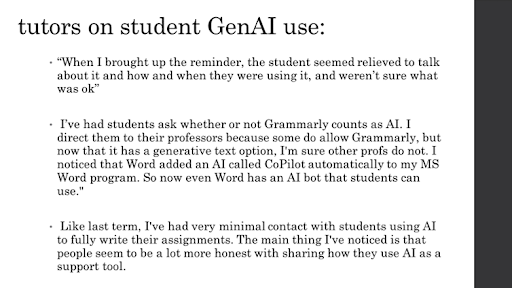

The aim was to make clear student responsibilities with a message of nonpunitive support. Our tutors work with what students bring to the table and redirect them toward independent writing if and as needed; we don’t report to their instructors and are fortunate to be supported by our Dean of Arts in this approach, making that a key message: “Our tutors are here to guide you if they see a problem, not to report on you . . . We can help check your assignment AI limits and show you effective options for independent writing.” Reported student reaction to this approach in our Writing Centre has been relief and (new) open discussion of AI with tutors (Figures 2 and 3).

Figure 2

Figure showing quotes from tutors on student GenAI use in the writing centre

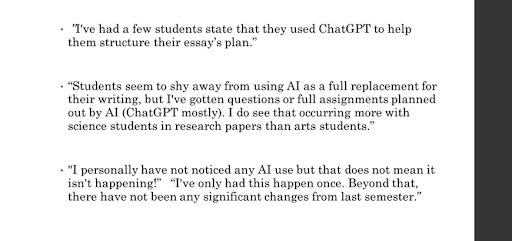

Figure 3

Figure showing quotes from tutors on student GenAI use in the writing centre

Softwariness

Accompanying GenAI into higher education are issues well known to its creators, proponents, and detractors alike: how racist, misogynist, and colonial historical views are writ large and magnified exponentially in the giant data scrape of existing historical and contemporary material. Issues of data privacy persist, as do, within the tools, potential plagiarism, copyright and ongoing legal issues, sustainability of the business model, and ownership of created work. Student practicalities include the cost burden of tool purchase; software access and experience before arrival at university, which can widen the gulf between privileged and vulnerable groups; and clumsy AI use that leads to poor learning outcomes or to probation, dismissal, or departure [2]. Writing centres are part of a wider world, and in deciding Writing Centre use I’m guided by questions: What is our aim in incorporating GenAI in sessions? Is this use academically and socially ethical? And, as recommended to me by some developers of GenAI: What problem does this tool solve?

Just a few weeks ago, in January 2025, a series of qualitative and quantitative studies found overwhelming and consistent evidence that “higher AI usage is strongly associated with lower critical thinking abilities” (Gerlich, 2025, p. 16). While this finding can’t inform policy that writing centres don’t set, it does underscore the continued need for independent writing in higher education as core work, particularly at foundational undergraduate levels, and the continued need for tutors to support this critical thinking. A person’s academic writing is even more key than those of us at writing centres already suspected: it is essential to critical thinking and cognitive development, with heavy reliance on GenAI leading to “diminished decision-making and critical analysis abilities . . . reduced problem-solving skills, with students demonstrating lower engagement in independent cognitive processing” (2025, p. 6).

Methodapt

Evidence, not pressure, will guide our own writing centre’s approach this coming summer term in practical tutorial modes that emphasize student-generated conceptual approaches (human brainstorming) and share those benefits with them: “there should be an emphasis on developing students’ metacognitive skills to help them become aware of when and how to use AI tools appropriately without undermining their cognitive development” (p. 24). So our writing centre has not yet incorporated GenAI into appointments, pausing to research and consider process because how you integrate AI matters: whether in human-AI chess teams, medical research, or writing centres, what proves most effective is not the best tool but the best collaboration.

For now we’ll continue to press undergraduate students to generate their own rough drafts (ideas, connection, engagement), moving away from AI as so-called ‘brainstorming’ and toward its use as a revision and refinement tool at later stages, with tutor guidance that is practical and transparent.

Composervers – GenAI in Writing Centre sessions: first steps (summer 2025):

- Steering use to after student-generated brainstorming and rough drafts, to refine or shape, in line with existing academic iterative processes for analytic work

- More use with upper-year and grad projects and less for 1st- and 2nd-year foundational skill-building / cognitive capacity

- Use and teach in sessions for format and sorting: lists, index, tables, figure captions, appendices

- Align with professor boundaries by embedding tutors in classes with a GenAI academic assignment

- Offer AI-designated specialist tutors

In light of these issues and evidence, our Writing Centre aims to create by September 2025 a GenAI tutor session process that builds ability, not dependence. A place to begin.

Footnotes

- [1] Headings written with J. Simon’s Entendrepreneur: Generating humorous portmanteaus using word-embeddings. In Second Workshop on Machine Learning for Creativity and Design (NeurIPS 2018). Early draft model used with creator permission.

- [2] Even a Microsoft-funded whitepaper on moving AI into higher ed is blunt about “legitimate concerns around data protection, use and misuse of intellectual property, algorithmic bias, academic integrity, and the ethical and responsible use of AI, Inequitable access, and the risk of broadening the digital divide” (D. Liu and S. Bates, 2025, “Generative AI in higher education: Current practices and ways forward”).

References

Gerlich, M. 2025. The Human factor of AI: Implications for critical thinking and societal anxieties (January 07, 2025). Available at SSRN: https://ssrn.com/abstract=5085897 or http://dx.doi.org/10.2139/ssrn.5085897